The cover story of this month’s Communications of the ACM is a mostly technical paper called Protecting 3D Graphics Content. In it, Stanford graduate student David Koller and professor Marc Levoy describe a method for copy-protecting 3D graphical models such as the ones generated in the Stanford Digital Michelangelo Project. Most copy-restriction schemes are snake oil: they rely on a mythological “trusted client” that prevents the user from accessing the raw bits being displayed on his own monitor by his own CPU. The Stanford team has gotten around this problem for 3D models by keeping the high-resolution model on their own server and sending only 2D images to the client. The client uses a much lower-resolution 3D model as the interface for choosing new camera angles. The approach seems sound, though the authors admit it might still be possible to reconstruct the 3D model by applying machine-vision techniques to their 2D images.
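To make the split between server and client concrete, here is a toy sketch of the idea in Python. It is not the authors’ implementation: every name in it (`RenderServer`, `Client`, `Camera`, `sample_sphere`, `project`) is hypothetical, a sampled sphere stands in for a scanned statue, and a crude orthographic point projection stands in for real rendering. The only point it illustrates is that the detailed geometry stays on the server and nothing but pixels crosses the wire.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Camera:
    azimuth_deg: float  # rotation about the vertical (y) axis


def sample_sphere(n):
    """Return n*n points on a unit sphere (a stand-in for scanned geometry)."""
    points = []
    for i in range(n):
        theta = math.pi * (i + 0.5) / n
        for j in range(n):
            phi = 2 * math.pi * j / n
            points.append((math.sin(theta) * math.cos(phi),
                           math.cos(theta),
                           math.sin(theta) * math.sin(phi)))
    return points


def project(points, camera, size):
    """Orthographically project points in [-1, 1]^3 onto a size x size 0/1 image."""
    a = math.radians(camera.azimuth_deg)
    image = [[0] * size for _ in range(size)]
    for x, y, z in points:
        xr = x * math.cos(a) + z * math.sin(a)  # rotate about y, discard depth
        col = min(size - 1, max(0, int((xr + 1) / 2 * size)))
        row = min(size - 1, max(0, int((1 - y) / 2 * size)))
        image[row][col] = 1
    return image


class RenderServer:
    """Holds the high-resolution model and hands out only 2D images."""

    def __init__(self, high_res_points, image_size=16):
        self._points = high_res_points  # never sent to the client
        self._size = image_size

    def render(self, camera):
        return project(self._points, camera, self._size)


class Client:
    """Holds only a coarse proxy model, used to pick camera angles."""

    def __init__(self, server, low_res_points):
        self.server = server
        self.proxy = low_res_points

    def preview(self, camera, size=4):
        return project(self.proxy, camera, size)  # local, low detail

    def request_view(self, camera):
        return self.server.render(camera)  # remote, pixels only


server = RenderServer(sample_sphere(64))
client = Client(server, sample_sphere(4))
image = client.request_view(Camera(azimuth_deg=30.0))
```

The weakness the authors concede is visible even here: each call to `request_view` leaks a little information about the hidden geometry, and enough views from enough angles could in principle be stitched back into a 3D model.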

Scholarly researchers are often faced with difficult ethical trade-offs, especially when developing new technology. The authors state their own particular quandary in the second paragraph:

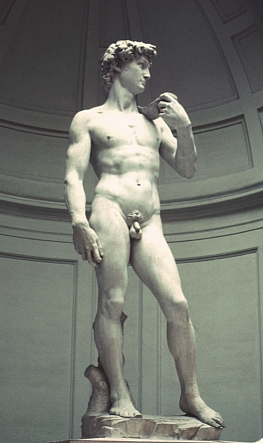

These statues represent the artistic patrimony of Italy’s cultural institutions, and our contract with the Italian authorities permits distribution of the 3D models only to established scholars for noncommercial use. Though everyone involved would like the models to be available for any constructive purpose, the digital 3D model of the David would quickly be pirated if it were distributed without protection: simulated marble replicas would be manufactured outside the provisions of the parties authorizing creation of the model.

In other words, as academics, Koller and Levoy understand how the free sharing of history, art and scholarly data contributes to society as a whole, but they also recognize that without some assurance that this data will not be shared freely, the authorities who control access to the original works won’t allow any sharing. The museum would also like to see the data shared with fellow researchers, but doesn’t want to see it used to make replicas without its approval and license fees. Unfortunately, I think Koller, Levoy and the museum all come down on the wrong side of this question.

One of the things that jars me in reading this piece is the liberal sprinkling of the words “theft” and “piracy,” as in “For the digital representations of valuable 3D objects (such as cultural heritage artifacts), it is not sufficient to detect piracy after the fact; piracy must be prevented.” Here the authors are making a fundamentally false assumption. I cannot speak to Italian law, but under U.S. law (and thus for any viewer of the data in the U.S.) exact models of works that are in the public domain are not themselves copyrightable. To quote the 1999 decision by the U.S. District Court for the Southern District of New York in Bridgeman Art Library, Ltd. v. Corel Corp.:

There is little doubt that many photographs, probably the overwhelming majority, reflect at least the modest amount of originality required for copyright protection. “Elements of originality . . . may include posing the subjects, lighting, angle, selection of film and camera, evoking the desired expression, and almost any other variant involved.” [n39] But “slavish copying,” although doubtless requiring technical skill and effort, does not qualify. [n40] As the Supreme Court indicated in Feist, “sweat of the brow” alone is not the “creative spark” which is the sine qua non of originality. [n41] It therefore is not entirely surprising that an attorney for the Museum of Modern Art, an entity with interests comparable to plaintiff’s and its clients, not long ago presented a paper acknowledging that a photograph of a two-dimensional public domain work of art “might not have enough originality to be eligible for its own copyright.” [n42]

What Koller and Levoy are protecting is not the museum’s property: the 3D models of David belong to the public at large. What they are protecting is a business model, one that is based on preventing the legitimate and legal sharing of information. Their opponents in this battle are neither thieves nor pirates; they are merely potential competitors for the museum’s gift shop, or customers the museum fears losing.

It is understandable that museums want to protect an income stream they’ve come to rely on to accomplish their mission. It is also understandable that Koller and Levoy are willing to help museums maintain their gatekeeper status in exchange for at least limited access to the treasures they hold. After all, isn’t partial access to the world’s greatest artwork in digital form better than no access at all?

In this case I fear the short-term gain will be outweighed by long-term loss. Information technology and policy are in a state of incredibly rapid flux, with new systems constantly building on top of what came before like a giant coral reef. This project takes us another step down the path of information gatekeepers and toll-road bandits, a path that rewards the hoarding of information and the blockade of communication rather than the promotion of the useful arts and sciences. It also reinforces the message that we are all cultural sharecroppers, that education and the arts are reserved for those with the money to pay for them, and that the public domain is just a myth that thieves tell themselves to assuage a guilty conscience. This is the exact opposite of what our universities and museums represent, and it undermines the project participants’ legitimate desire to share these treasures with the world. We can do better, and we should.

Update 6/21/05: A longer version of the CACM article (published in SIGGRAPH 2004) can be found here, and includes a video demonstration (Quicktime MPEG-4, 20MB).